Can AI write a good award entry? Should you use AI to write your award entry?

Chris Robinson

MD, Boost Awards

Last updated 05/02/2026

This article is written by Chris Robinson, MD of Boost Awards

AI-generated award entries are widespread and increasing. According to recent (late-2025) research published by the Independent Awards Standards Council, awards judges and organisers estimated that about a third of the entries they had seen in the previous 12 months were AI-generated (average estimates of 31% and 33% respectively). This is broadly unchanged from the 2024 research, although both the judges and organisers surveyed believe the proportion of AI-generated award entries will increase over time.

It is therefore no surprise that I’m increasingly asked: ‘Why don’t I just use AI to write my award entry?’

Having spent two years exploring this topic with awards industry stakeholders, attending in-person and digital events on AI risks and benefits, and helping conduct two pieces of research into AI and awards, I would like to share an updated, in-depth response to this superficially simple question.

This article summarises the many opportunities and risks associated with using AI for award entries. Please note that being an award entry consultancy doesn't mean Boost is against AI in its entirety. In this article I will share suggestions on how to use AI well, alongside tips on avoiding its pitfalls and risks.

The first two questions

So, you’ve spent the last few months or years rolling out a successful project, product, initiative or strategy, and you want awards judges to hear your story and agree it deserves an award. Should you entrust AI with convincing the judges that you should win?

The first two questions here are: can awards judges spot AI-generated entries? And, secondly, do they care?

Can award judges spot an AI-generated award entry?

In short: yes, usually. According to the 2025 research by the Independent Awards Standards Council (of which I am a member), 71% of judges believe they can spot AI-generated award entries, down from 79% in our 2024 research. They attribute this to telltale signs such as a 'lack of soul', the 'language and certain phrases' or 'bland, generic-sounding copy' (amongst other indicators). We've also heard that they notice commonalities in language that an individual entrant wouldn't be aware of, but which become apparent when award entries are read side by side. As AI gets better at emulating human writing styles, this may become harder; for now, though, most people using AI for award entries seem to use it in a fairly simplistic way, which is easy to spot.

Do awards judges care?

The second question is less clear-cut, but there is some good news for AI users.

In short, the answer is: 'enough to warrant a careful approach'.

You might argue that judges should judge an entry on its merits and ignore how it was written, and most of the judges taking part in the research clearly share this view. Despite this, about 40% lean towards the increasingly popular adage: 'If you can't be bothered to spend time writing this, why should I be bothered to spend time reading it?' Here are some statistics from the 2025 research.

Firstly, the good news for AI users: a majority (58%) agree that 'I believe that using AI in the award entry process for things like interview transcription and content/data processing is acceptable'. And those who disagree don't disagree very strongly. In line with this, we at Boost use AI to capture call notes, process source material, conduct desk research and support other aspects of our day job (all within a Cyber Essentials accredited ecosystem, for the security reasons outlined later).

Secondly, the bad news for those thinking AI can be the primary author: only half agree that 'I believe that using generative AI to draft the final award entry is acceptable'. And many of those who disagree, disagree strongly. This matters, because your entry will be judged by a panel, and statistically that panel is likely to include at least one AI disapprover who is confident they can spot an AI-generated entry. In other words, at the point where the rubber hits the road (when judges actually read the award entry), AI should be kept firmly at arm's length.

Let’s use more of the research findings to dive into the risks and potential consequences. If a judge gets a strong whiff of gen AI:

- You will create a bad first impression, with a substantial 58% saying they will have less ‘belief that this will be an engaging read’ and 21% admitting to less ‘…willingness to read the entry thoroughly’.

- Most significantly, 42% say they will consciously award fewer marks!

- 33% believe there should be explicit consequences: 21% say the entrant should be asked to resubmit, and a small but extreme anti-AI minority of 4% (down from 14% in 2024) think your entry should be disqualified! If you have an AI-generated entry, you had better hope you don't get one of these judges…

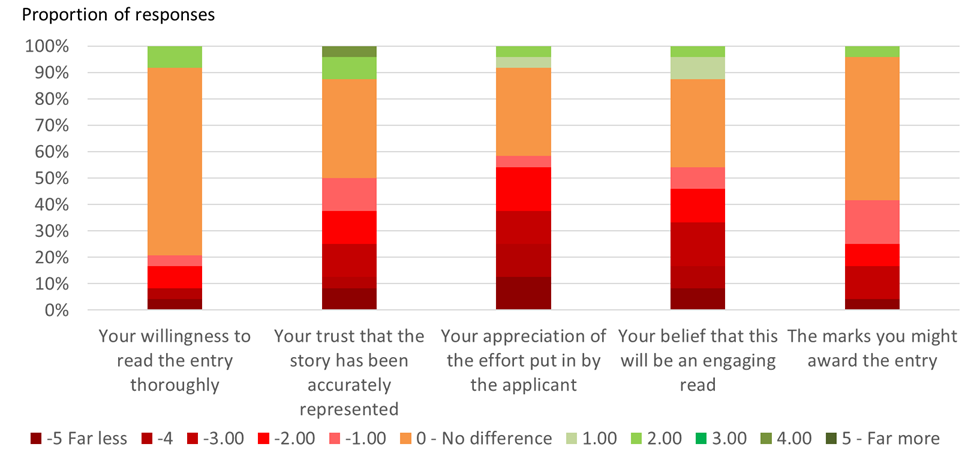

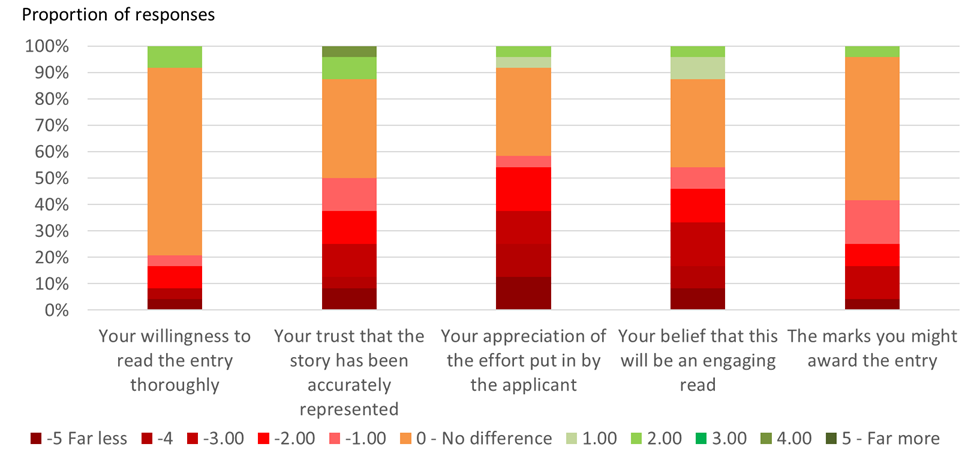

When asked “When you suspect that an award entry has been mostly written using AI, what effect does this have on the following?”, many judges are ambivalent (amber), but a significant proportion take a dim view and would mark the entry down (red).

I’ve also spoken personally to a number of awards judges, and it is clear from those conversations that they would prefer to read human-generated content that reflects the passion and authenticity of the person nominating the project/person/team/business. Their bias, conscious or not, is likely to favour the human writer when picking the winner.

What is the position of awards organisers?

Surely it’s irrelevant whether an awards organiser cares about submissions being AI-generated? The organisers aren’t (or shouldn’t be) the judges, after all. But they are the gatekeepers and rule-setters.

Although most awards organisers (about 71%) are fine with AI-generated entries in principle, others are very negative about them. In fact, 14% already check entries to ensure they aren’t overtly AI-generated, either by using people to spot them or by using detection technology such as GPTZero, Grammarly and Originality.ai. Some advertise the fact that they will ask these applicants to resubmit.

For example, the Learning Awards state: ‘Submissions relying solely on AI-generated content will be asked for resubmission.’

This practice is likely to grow over time, too: the majority (57%, similar to 2024) of awards organisers stated that they would use tech-based filters if their awards platform supported it.

31.3% of awards organisers surveyed in 2024 already filtered out AI-generated award entries

Another example is The International Brilliance Awards, which commented to me: “In our view, AI is fine for improving grammar and clarity, as long as the work is genuine, supported by evidence, and not filled with jargon – what matters most is originality and real impact. We encourage brief disclosure where AI materially shaped the narrative or visuals.”

To conclude this point: you could get AI to generate your award entry, which would be quick, easy and cheap, but there is a very high chance that at least one of your judges will consciously spend less time reading it and then mark it down. There is also a significant risk of having to resubmit if your content doesn’t pass an AI detection check. You would essentially need to comb through the entire AI-generated draft to ensure it is accurate, reflects your voice and your perception of the truth, AND doesn’t read as if it were written by gen AI. Writing it from scratch, or using a skilled writer, avoids this risk and stress.

Third consideration – will AI add value?

From what I’ve seen, the output you get by giving an award entry job to AI is not a million miles away from what you would get by giving the same job to an intelligent, rigorous English degree graduate or an accomplished journalist. AI will turn it around in seconds for next to nothing, and the linguistic quality will be in roughly the same ballpark.

However, having spent literally decades writing award entries and training up colleagues at Boost, I can tell you that award entry writing is far, far more than simply documenting the material provided. You cannot just drop a graduate into a senior consulting job and watch them fly – it takes time to learn some very specific skills and gain the right experience.

For example, finding the right contacts to interview, asking the right questions, pushing back against poor answers, finding a winning angle, deciding the scope of the story, sorting the ‘wow’ from the ‘OK’ in the details, identifying the right evidence points, spotting the gaps, making tough decisions on additional research to conduct, identifying what to cull and what to keep… these are all refined skills that can only come with time and human understanding of context and nuance (aspects often cited as shortcomings of AI).

Analogy time:

Look at it this way. If you gave poor-quality or limited ingredients to a robot cook and said, ‘Bake a cake of this size and shape with these ingredients’, it would do as instructed, finding a recipe that could work with what it had been given. It would then create a technically perfect cake that ticked the boxes. BUT, would it be good to eat? It would most likely only be as good as the recipe chosen and the ingredients provided, because the robot cook can’t taste the cake. As yet, it also cannot challenge the quality of the recipe or the selection of ingredients, or be bold enough to say, ‘This recipe is all I can do here, but it is a poor choice.’ The same is true for award entries: yes, they are meant to present the facts, but the real craft lies in finding the right narrative (a winning recipe), interviewing the right people and hunting down the right data points (the ingredients).

So, sticking with this analogy, imagine you have the best ingredients: all the right content, such as facilitated interview transcripts, source documents and meaningful results/data. Such trustworthy, high-quality information puts you in a much stronger position to prepare an entry, whether using a human or AI. However, at this point you have already done much of the heavy lifting. A thorough information-gathering exercise should put you in a really good place to write an award entry, and yes, this is the most appropriate place for AI to step in.

I recently heard a very relevant comment from another business owner: ‘AI will take you from 0% to 70% in seconds, but it will also take you from 100% to 70% in seconds.’ In other words, give AI the right raw content and it will get you most of the way there rapidly, but the output will still need significant additional work to make it award-winning. As we have already pointed out, rewriting AI content to feel more human, and double-checking that it has interpreted every nuance of the story and data correctly, is very often time-consuming, and there is no crumb trail showing its thinking. Given this is the critical stage in the process, why waste all the hard work of gathering the content by taking a shortcut when converting it into an entry? By all means use AI to process the content and speed things up, but keep the voice and narrator human.

Next consideration – can you trust AI to be accurate?

One change year on year is that awards judges and organisers now trust AI more to be faithful to the truth. However, generative AI still relies on statistics: it uses the source data you supply, plus the vast pool of data it was trained on, to predict which words should follow the previous ones. Each word choice is based on probability. It cannot tell if it is accidentally missing the context, reflecting a bias or misinterpreting the source material. It often takes itself down blind alleys and (especially when doing its own research) too often generates complete nonsense, known as ‘hallucinating’. The worst example is asking AI to research the rules and details of an awards programme: we find it comes up with the rules from previous years, other programmes by the same organisers, and information about similar-sounding awards.

This could lead to embarrassing situations when your entry goes through review and approval. For this reason, you should double-check every fact and stat, often going back to the source material to be entirely sure you have included all the facts and represented them accurately. In truth, you might as well write it yourself, or get a person or agency you trust to do it for you.

As the University of Cambridge website so eloquently puts it: ‘Oops! We Automated Bullshit: ChatGPT is a bullshit generator’. Marc North of Durham University reiterated this sentiment at a recent conference I attended, remarking: ‘AI has no desire or requirement to tell the truth’.

Furthermore, if an awards judge has experienced AI hallucinating or getting things wrong, they may harbour an ingrained bias against AI content.

Another consideration – can it do harm?

With any business decision, you have to ask yourself: ‘What’s the worst that can happen?’ Well, if you thought the worst that could happen in this context is being disqualified from an awards programme, there is actually worse.

Firstly, if you use AI without declaring it, a common problem arises when people ask: ‘Why did you write it like that?’ or ‘Why did you include this and not that?’ You will have no idea. You cannot go back to the AI and ask it to justify its earlier decisions. What we keep finding is that it chooses waffle over data points, even when asked not to, and often leaves out the most important points. You’ll consequently look bad for not having good answers to challenges about editorial and narrative decisions. Then you have no choice but to admit you used AI, and that’s the last time you and your preferred AI tool will be asked to do that task.

For another thing, AI draws on existing content to generate new content, which means your supposedly new content might reflect unintended biases or prejudices. For example, AI-generated images have been criticised as overly sexualised, and assumptions about gender and race in society often creep into AI-generated content.

Even worse, if you don’t use an enterprise AI licence, everything you process is in essence being shared with a third party, and that may include confidential content. If you are bound by a privacy policy or non-disclosure agreement, using the online version of ChatGPT or an equivalent tool is likely to breach it, and the content you share may be stored on a third-party server and used to train future models.

For this reason, if processing client-confidential information using AI, we at Boost use our Cyber Essentials accredited enterprise platform, so all the information remains within our estate. If you use a less secure agency or freelance writer, then your information may well be at greater risk.

And finally (this is improving over time, but still worth a mention) there is the subject of intellectual property rights (IPR). OpenAI now says that content created using its tools is owned by the user. However, AI might generate content that draws on someone else’s intellectual property, so you could find yourself accidentally infringing their copyright (multiple lawsuits are currently going through the courts), with your final content belonging to… who knows! I asked a panel of AI experts, ‘If I use AI to write an award entry, who owns the IPR of the final content?’, and no one had a clear answer. In this respect, it is still very much the Wild West.

The final consideration – will it win?

If you are writing a press release or case study, an average piece of content is arguably good enough. But if you are up against the best in the industry, your entry has to make your story sound the most deserving of the award, and that is not easy. It isn’t enough to ask, ‘Can AI write an award entry?’ You have to ask: ‘Can AI write an award-winning entry?’ The answer to the first question is clearly ‘yes’, but the answer to the second, taking everything we have explored here into account, is ‘unlikely’. On top of this, I would argue that it will become even less likely as more and more people use AI to generate run-of-the-mill award entries and AI-generated entries become the baseline: the 50-70% of entries that judges are most comfortable leaving off the shortlist or winners’ roster. I predict that we will then revert to bringing humans back into the mix, this time to steal a march on the bots.

Conclusion

To summarise the research findings: the good news is that using AI to process the information for your entry makes sense and is mostly supported by judges. However, letting AI be the voice and primary author of your submission is very risky. Most awards judges are confident they can spot AI-generated entries, a majority will think less favourably of such an entry, and about 40% will consciously mark it down.

Even if judges don’t mark an AI-generated entry down, there is an increased risk that the entry, although grammatically correct and within word limits, will not do your story the same justice as an experienced award entry writer would. It is only as good as the content provided: a bot cannot conduct an intelligent interview, find the best angle or push back against inadequate content. Furthermore, the risk of being caught by AI-detection technology is increasing, with most awards organisers supporting its use; and, if your entry is flagged, there is a growing chance you will be asked to rewrite it rapidly and resubmit.

So, when creating an award entry, use AI to reduce the workload, but only in ways that actually increase your chances of walking away from the awards ceremony as the winner.

Boost – a helping hand entering awards

Boost is the world’s first and largest award entry consultancy, having helped clients, from SMEs to multinationals, win over 2,000 credible business awards. Increase your chances of success significantly – call Boost on +44(0)1273 258703 today for a no-obligation chat about awards.

© This article was written by Chris Robinson and is the intellectual property of award entry consultants Boost Awards.

Can AI write a good award entry? Should you use AI to write your award entry?

Chris Robinson

MD, Boost Awards

Last updated 05/02/2026

This article is written by Chris Robinson, MD of Boost Awards

AI-generated award entries are prolific and increasing. According to recent (late-2025) research published by the Independent Awards Standards Council, awards judges and organisers both estimated that about a third of entries (average estimates were 31% and 33% respectively) they had seen in the previous 12 months were AI-generated. This remains unchanged since the 2024 research, although both awards judges and organisers surveyed believe that the proportion of award entries being AI-generated will increase with time.

It is therefore no surprise that I’m increasingly asked: ‘Why don’t I just use AI to write my award entry?’

Having spent two years exploring this topic with awards industry stakeholders, attending in-person and digital events relating to AI risks and benefits, and helping conduct two pieces of research into AI and awards, I would like to share an undated and in-depth response to this superficially simple question.

This article will summarise the many opportunities and risks associated with using AI for award entries. Please note that, just because Boost is an award entry consultancy, doesn’t necessarily mean we are against AI in its entirety. In this article I will share suggestions about how to use AI well, alongside tips on avoiding pitfalls and risks.

The two first questions

So, you’ve spent the last few months or years rolling out a successful project, product, initiative or strategy, and you want awards judges to hear your story and agree it deserves an award. Should you entrust AI with convincing the judges that you should win?

The first two questions here are… can awards judges spot AI-generated entries? And, secondly, do they care?

Can award judges spot an AI-generated award entry?

In short, yes, usually. According to the 2025 research by the Independent Awards Standards Council (of which I am a member), 71% of judges believe they can spot AI-generated award entries, no substantial change from the 79% in our 2024 research. They say this is due to telltale signs like a ‘lack of soul’, the ‘language and certain phrases’ or ‘bland, generic-sounding copy’ (amongst other indicators). We’ve also heard that they can spot commonalities in language use that you wouldn’t be aware of, but which become apparent when you put award entries side-by-side. As AI becomes better at emulating human writing styles, this might decrease – but for now, most people using AI for award entries seem to be using it in a fairly simplistic way, which is easy to spot.

Do awards judges care?

On the second consideration, it is less clear cut – but there is some good news for AI users.

In short, the answer is ‘enough to consider a careful approach’.

You might argue that judges should just judge an entry on merit and ignore how it was written – and most of the judges taking part in the research clearly share this view. Despite this, about 40% lean towards the increasingly popular adage of ‘if you can’t be bothered to spend time writing this, then why should I be bothered to spend time reading it’. Here are some statistics from the 2025 research…

Firstly, the good news for AI users: A majority (58%) agree ‘I believe that using AI in the award entry process for things like interview transcription and content/data processing is acceptable’. And those who disagree with this, don’t disagree very strongly. In accordance with this, we at Boost use AI to capture call notes, process source material, conduct desk research, and other aspects that help with our overall day job (all within a Cyber Essentials accredited ecosystem for the security reasons we will outline later).

Only half of judges agree ‘I believe that using generative AI to draft the final award entry is acceptable’. And many of those who disagree, disagree strongly.

Secondly, the bad news for those thinking AI can be the primary author: only half agree ‘I believe that using generative AI to draft the final award entry is acceptable’. And many of those who disagree, disagree strongly. Such data is very significant, because your entry will be judged by a panel, and statistically within this panel you will have at least one AI disapprover who is confident they can spot an AI-generated entry. In other words, when it comes to the point where the rubber hits the road – and judges’ eyeballs read the award entry – that is where AI should be kept firmly at arm’s length.

Let’s use more of the research findings to dive into the risks and potential consequences. If a judge gets a strong whiff of gen AI:

- You will create a bad first impression, with a substantial 58% saying they will have less ‘belief that this will be an engaging read’ and 21% admitting to less ‘…willingness to read the entry thoroughly’.

- Most significantly, 42% say they will consciously award less marks!

- 33% believe that there should be explicit consequences – 21% say the entry should be asked to resubmit, and a small but extreme anti-AI minority – 4% (less than the 14% in 2024) think your entry should be disqualified! If you have an AI-generated entry, you had better hope you don’t get one of these judges…

When asked “When you suspect that an award entry has been mostly written using AI, what affect does this have on the following?” many judges are ambivalent (amber), but a significant proportion take a dim view and would mark the entry down (red).

I’ve also spoken personally to a number of awards judges, and it is clear from those conversations that they would prefer to read human-generated content that reflects the passion and authenticity of the person nominating the project/person/team/business. Their bias, whether intentional or not, will naturally favour the human writer when picking the winner.

What is the position of awards organisers?

Surely it’s irrelevant whether an awards organiser cares about submissions being AI generated or not? The organisers aren’t (or shouldn’t be) the judges, after all: but they are the gatekeepers and rule-setters.

Although most awards organisers (about 71%) are fine about AI-generated entries in principle, others are very negative about it. In fact, 14% already check entries to ensure they aren’t overtly AI generated. Some advertise the fact that they will ask these applicants to resubmit. This can be done by using people to spot them, or utilising technology such as GPTZero, Grammarly, and Originality.aI.

For example, the Learning Awards state: ‘Submissions relying solely on AI-generated content will be asked for resubmission.’

This process is likely to increase over time, too – the majority (57%, a similar number to 2024) of awards organisers stated that they would use tech-based filters if their awards platform supported it.

31.3% of awards organisers surveyed in 2024 already filtered out AI-generated award entries

Another example is The International Brilliance Awards, which commented to me: “In our view, AI is fine for improving grammar and clarity, as long as the work is genuine, supported by evidence, and not filled with jargon – what matters most is originality and real impact. We encourage brief disclosure where AI materially shaped the narrative or visuals.”

To conclude this point: you could get AI to generate your award entry, which would be quick, easy and cheap; but there is a very high chance that one of your judges will consciously spend less time reading your entry, and subsequently mark it down. There is also a significant risk of having to resubmit if your content doesn’t pass an AI detection process. You would essentially need to comb over the entire AI-generated draft to ensure it is accurate, reflects your voice and perception of the truth, AND doesn’t read like it is written by gen AI. By writing it from scratch, or using a skilled writer, such potential risk and stress is alleviated.

Third consideration – will AI add value?

From what I’ve seen, the output received by giving an award entry job to AI is not a million miles away from the output received by giving the same job to an intelligent, stringent English Degree graduate, or accomplished journalist. AI will turn it around in seconds for nothing, but within the same ballpark in terms of linguistic quality.

However, having spent literally decades writing award entries and training up colleagues at Boost, I can tell you that award entry writing is far, far more than simply documenting the material provided. You cannot just drop a graduate into a senior consulting job and watch them fly – it takes time to learn some very specific skills and gain the right experience.

For example, finding the right contacts to interview, asking the right questions, pushing back against poor answers, finding a winning angle, deciding the scope of the story, sorting the ‘wow’ from the ‘OK’ in the details, identifying the right evidence points, spotting the gaps, making tough decisions on additional research to conduct, identifying what to cull and what to keep… these are all refined skills that can only come with time and human understanding of context and nuance (aspects often cited as shortcomings of AI).

Analogy time:

Look at it this way. If you gave poor-quality or limited ingredients to a robot cook and said, ‘Bake a cake of this size and shape with these ingredients’, it would do as it was instructed, finding a recipe that could work with the limited ingredients. It would then create a technically perfect cake that would tick the boxes. BUT, would it be good to eat? It would most likely only be as good as the recipe chosen and ingredients provided, because the robot chef can’t taste the cake or challenge the quality of the recipe/ingredients… and as of yet, it cannot challenge the selection of ingredients, or even be bold enough to say ‘this recipe is all I can do here, but it is a poor choice’. The same is true for award entries – they are indeed meant to present the facts. However, the real craft lies in finding the right narrative (a winning recipe), interviewing the right people, and hunting down the right data points (the ingredients).

So, sticking with this analogy – imagine you have the best ingredients, as in, all the right content: facilitated interview transcripts, source documents, meaningful results/data, etc. Such trustworthy, high-quality information puts you in a much stronger position to prepare an entry – whether using a human or AI. However, at this point you have already done much of the heavy lifting. A thorough information gathering exercise should put you in a really good place to write an award entry. And yes, this is the most appropriate place for AI to step in.

I heard a very relevant comment from another business owner recently that applies here: ‘AI will take you from 0% to 70% in seconds, but it will also take you from 100%-70% in seconds.’ In other words, give AI the right raw content and it will get you most of the way there rapidly, but the output will still need significant additional work to make it award winning. As we have already pointed out, rewriting AI content to feel more human and double checking it has interpreted every nuance of the story and data correctly is very often a time-consuming task – and there is no crumb trail for the thinking. Given this is the critical stage in the process, why waste all the hard work in gathering the content by taking a shortcut when converting it into an entry? By all means process the content to speed up the process, but keep the voice and narrator human.

Next consideration – can you trust AI to be accurate?

One change year on year is an increase in the degree to which awards judges and organisers trust AI to be faithful to the truth, but generative AI still relies on maths, source data you supply, and deep pools of other data to decide which words follow on from previous words. Each word choice is based on probability. It cannot tell if it is accidentally missing the context, sharing a bias, or misinterpreting the source material. It often takes itself down blind alleys, and (especially if doing its own research) too often generates complete nonsense – referred to as ‘hallucinating’. The worst example here is asking AI to research the rules and details of an awards programme – we find for this it comes up with the rules for previous years, other programmes by the same organisers, and information about similar sounding awards.

This could lead to potentially embarrassing situations when you get your entry reviewed and approved. For this reason, you should double-check every fact and stat, often having to go back to the source material to be entirely sure you have included all the facts and represented them accurately. In truth, you might as well do it yourself, or get a person or agency you trust to do it for you.

As The University of Cambridge website so eloquently puts it: ‘Oops! We Automated Bullshit: ChatGPT is a bullshit generator’. Marc North of Durham University reiterated this sentiment at a recent conference I attended, remarking: ‘AI has no desire or requirement to tell the truth’.

Furthermore, once an awards judge has experienced AI hallucinating or getting things wrong, they are likely to harbour an ingrained bias against AI content.

Another consideration – can it do harm?

With any business decision, you have to ask yourself: ‘What’s the worst that can happen?’ Well, if you thought the worst that can happen in this context is that you get disqualified from an awards programme, there is actually worse.

Firstly, if you use AI without declaring it, you will be stuck when people ask: ‘Why did you write it like that?’ or ‘Why did you include this and not that?’ You cannot go back to the AI and get it to justify its earlier decisions. What we keep finding is that it chooses waffle over data points, even when asked not to. It often leaves out the most important points, and you will consequently look bad when you cannot answer challenges about editorial or narrative decisions. At that point you have no choice but to admit you used AI; and that will be the last time you and your preferred AI tool are asked to do that task.

Secondly, AI uses previous content to generate new content, which means your supposedly new content might reflect unintentional biases or prejudices. For example, AI-generated images have been criticised as overly sexualised, and societal assumptions about gender and race often creep into AI content.

Even worse, if you don’t use an enterprise AI licence, then everything you process is, in essence, potentially confidential content shared with a third party. If you are bound by a privacy policy or non-disclosure agreement, then using the online ChatGPT or an equivalent is a clear breach of that policy, and the content you share may be stored on a third-party server and used to train future models.

For this reason, if processing client-confidential information using AI, we at Boost use our Cyber Essentials accredited enterprise platform, so all the information remains within our estate. If you use a less secure agency or freelance writer, then your information may well be at greater risk.

And finally (this is improving over time, but still worth a mention) there is the subject of intellectual property rights (IPR). OpenAI now says that content created using its AI is owned by the user. However, AI might create content using someone else’s intellectual property, so you could accidentally infringe someone else’s copyright (multiple lawsuits are currently going through the courts), with your final content belonging to… who knows! I asked a panel of AI experts, ‘If I use AI to write an award entry, who owns the IPR of the final content?’ and no one had a clear answer. In this respect, it is still very much the Wild West.

The final consideration – will it win?

If you are writing a press release or case study, then an average piece of content is arguably good enough. But if you are up against the best in the industry, your entry has to make your story sound the most worthy of winning – and that is not easy. It isn’t enough to ask, ‘Can AI write an award entry?’ You have to ask: ‘Can AI write an award-winning entry?’ The answer to the first question is clearly ‘yes’, but the answer to the second, taking everything we have explored here into account, is ‘unlikely’. On top of this, I would argue that it will become even less likely as more and more people use AI to generate run-of-the-mill award entries, and AI-generated entries become the baseline: the 50-70% of entries that judges are most comfortable leaving off the shortlist or winners roster. I predict that we will then revert to bringing humans into the mix more, this time to steal a march on the bots.

Conclusion

To summarise the research findings – the good news is that using AI to process the information you use in your entry makes sense and is mostly supported by judges. However, letting AI be the voice and primary author of your submission is very risky; awards judges have become adept at spotting AI-generated entries, and a majority will think less favourably of the entry, with about 40% consciously marking your entry down.

Even if judges don’t mark an AI-generated entry down, there is an increased risk that the entry itself, although grammatically correct and within word limits, will not do your story the same justice as an experienced award entry writer would. It is only as good as the content provided: a bot cannot conduct an intelligent interview, find the best angle, or push back against inadequate content. Furthermore, the risk of being filtered out by AI-spotting technology is increasing, and awards organisers mostly support this; when an entry is spotted, there is an increasing chance you will be asked to rapidly rewrite and resubmit it.

So, when creating an award entry, decrease the workload with AI, but only do so in a way that actually increases your chances of walking away from the awards ceremony as the winner.

Boost – a helping hand entering awards

Boost is the world’s first and largest award entry consultancy, having helped clients, from SMEs to multinationals, win over 2,000 credible business awards. Increase your chances of success significantly – call Boost on +44(0)1273 258703 today for a no-obligation chat about awards.

(C) This article was written by Chris Robinson and is the intellectual property of award entry consultants Boost Awards.
